Blackmagic Design has published a very comprehensive 156-page camera guide for the new URSA Cine Immersive camera. As this is a brand new form of technology, there is a pretty steep learning curve. It covers both learning how to use the camera and dealing with the recorded footage in post-production.

The guide is designed as an introduction to Blackmagic URSA Cine Immersive and how to shoot with it. It focuses on the camera’s basic operation and on the unique format the camera is designed to deliver: Apple Immersive Video. An understanding of the format will help you better understand the camera itself. There is a mix of technical and creative advice that will help you not just to use the camera, but to understand the benefits of the immersive format.

It’s good to see Blackmagic Design publish a detailed operating guide that helps demystify the camera and answers many of the questions potential users may have.

Blackmagic URSA Cine Immersive is designed to shoot 180-degree stereoscopic VR footage, commonly called immersive video. In particular, it has been optimized to shoot Apple Immersive Video for the Apple Vision Pro, currently the highest quality immersive experience available.

What is Immersive?

Immersive is a three-dimensional spatial video format. You’re likely aware that other similar technologies have existed for some time. To understand what makes immersive different, here’s a broad overview of other technologies:

Traditional 3D Cinema allows the audience to experience depth within the frame by capturing each scene with two cameras with an interaxial distance that mimics the distance between human eyes. While stereoscopic images allow the audience to experience depth, the audience’s perspective is controlled by the cinematographer’s composition.

Spherical Video combines the principles of 3D and 360-degree cameras, allowing audiences to look in any direction while experiencing depth. 360-degree images require very high resolutions so that the small portion of the image the audience is looking at has sufficient fidelity. Because we can only turn our heads roughly from shoulder to shoulder, the audience typically stands or sits on a swivel chair so they can look behind them.

Volumetric Video allows the audience not just to experience depth and look around, but to move and change their perspective. While camera arrays can capture volumetric video, most virtual reality and augmented reality experiences use dynamically rendered 3D assets and require a safe environment for participants to move around in.

Immersive combines the best of traditional cinema and 3D interactive formats. In its simplest form, it is a high frame rate, high-resolution 180-degree stereoscopic format. Blackmagic URSA Cine Immersive is designed specifically to capture Apple Immersive Video. This format is used with Apple Vision Pro and supports high-resolution stereoscopic 3D video in a 180-degree field of view.

Apple Immersive Video has the following features:

- 90 frames per second playback.

- Can be distributed at resolutions up to 4320 x 4320 per eye.

- A unique metadata system for mapping the original camera image into the 180-degree environment.

- Apple Spatial Audio Format to provide 3D sound.

Apple Immersive Video introduces a new approach to visual storytelling and offers an alternative to traditional 2D cinema. It is not intended as a replacement but as an additional format that can be selected based on the needs of the project. As with any format, creative choices will vary depending on the story being told.

While new formats often involve complex workflows, URSA Cine Immersive is designed to simplify the process of capturing Apple Immersive Video. It provides a straightforward production path for immersive content and may also be used for visual effects and other applications.

Apple Immersive Video requires hardware capable of high-resolution stereoscopic 180-degree playback. Apple Vision Pro is currently the only device that supports this format.

Apple Vision Pro

The headset features dual 3660 x 3200 pixel micro-OLED displays with a 7.5 micron pixel pitch. It includes 13 tracking cameras, a LiDAR scanner, inertial measurement units, and eye tracking sensors to align imagery with head and eye movement. An M2 processor with an 8-core CPU and 10-core GPU drives playback at up to 100 frames per second.

URSA Cine Immersive

The URSA Cine Immersive uses two matched 58 megapixel RGBW sensors. The sensors are nearly square to capture a full 180-degree field of view, both horizontally and vertically. Each sensor is paired with a 210-degree fisheye lens. The lens centers are placed 64mm apart.

Built-in ND filters provide up to 8 stops of light control. The lens aperture is fixed at approximately f4.5.

The camera records two 8K image streams at 90 frames per second. This results in significantly higher data rates than standard 4K 24p recording.
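To put “significantly higher” into perspective, here is a rough back-of-the-envelope sketch comparing raw pixel throughput. It assumes each sensor delivers about 58 million pixels per frame (the figure quoted above) and takes UHD 3840 x 2160 as “standard 4K”. It counts sensor pixels rather than the exact recorded frame size, and real Blackmagic RAW data rates also depend on the compression setting and the image content.

```python
# Back-of-the-envelope pixel throughput comparison (a sketch, not a data-rate calculator).
# Assumptions: ~58 million pixels per sensor (as quoted for the camera) and
# UHD 3840 x 2160 standing in for "standard 4K".

SENSOR_PIXELS = 58_000_000  # approximate pixels per sensor
SENSOR_COUNT = 2            # one sensor per eye
IMMERSIVE_FPS = 90

UHD_PIXELS = 3840 * 2160    # assumed "standard 4K" frame
STANDARD_FPS = 24


def pixel_rate(pixels_per_frame: int, streams: int, fps: int) -> int:
    """Pixels captured per second across all image streams."""
    return pixels_per_frame * streams * fps


immersive = pixel_rate(SENSOR_PIXELS, SENSOR_COUNT, IMMERSIVE_FPS)
standard = pixel_rate(UHD_PIXELS, 1, STANDARD_FPS)

print(f"Immersive 90p: {immersive / 1e9:.2f} gigapixels per second")
print(f"4K 24p:        {standard / 1e9:.2f} gigapixels per second")
print(f"Ratio:         about {immersive / standard:.0f}x")
```

By this rough measure, the camera handles around 50 times as many pixels per second as a single 4K 24p stream. That is why the choice of compression setting matters so much.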

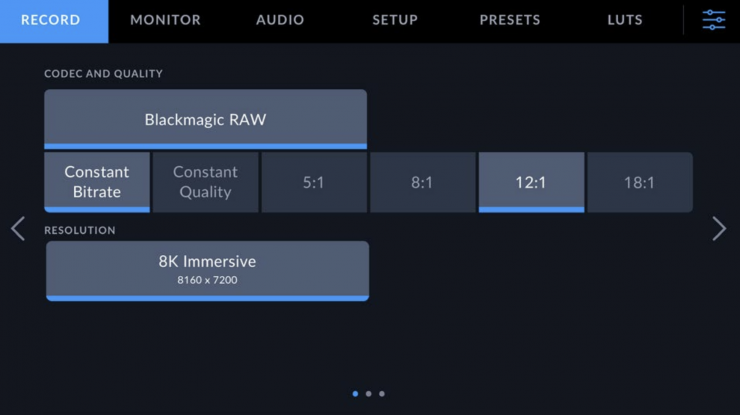

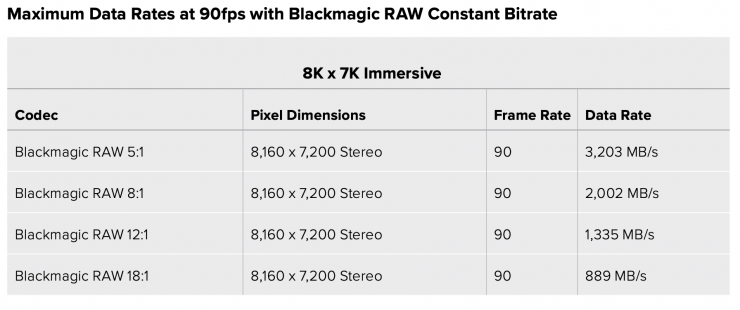

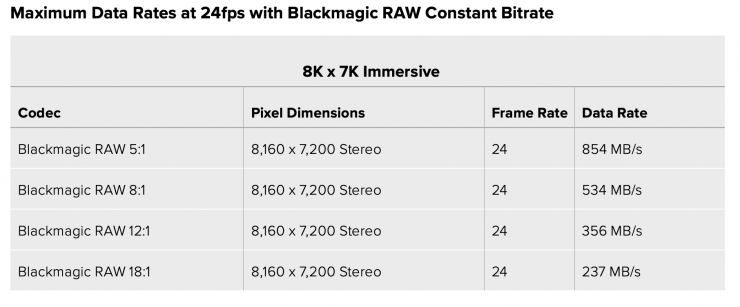

The camera records in the Blackmagic RAW codec. The options are split into four ‘constant bitrate’ settings and four ‘constant quality’ settings. Blackmagic Design highly recommends the 12:1 or Q3 compression settings when recording 90fps content for Apple Vision Pro. With current computing hardware, these settings hit the sweet spot between manageable data rates and file sizes while still retaining extremely high image quality.

The tables above contain data rates for 8K x 7K immersive content at 90 frames per second and 24 frames per second.
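Turning a data rate from those tables into a storage budget is simple arithmetic. The sketch below assumes rates in megabytes per second and decimal units (1 GB = 1,000 MB, 1 TB = 1,000,000 MB). The 1,000 MB/s rate and 8 TB capacity in the example are hypothetical placeholders, not figures from the tables or the guide.

```python
# Convert a recording data rate into storage figures (decimal units).
# The example rate and capacity below are hypothetical placeholders;
# substitute the data rate for your chosen setting from the tables.


def gb_per_minute(rate_mb_per_s: float) -> float:
    """Gigabytes written per minute of recording."""
    return rate_mb_per_s * 60 / 1000


def minutes_on_media(capacity_tb: float, rate_mb_per_s: float) -> float:
    """Approximate minutes of recording that fit in the given capacity."""
    capacity_mb = capacity_tb * 1_000_000
    return capacity_mb / rate_mb_per_s / 60


example_rate = 1000.0   # hypothetical MB/s
example_capacity = 8.0  # hypothetical TB

print(f"{gb_per_minute(example_rate):.0f} GB per minute")
print(f"{minutes_on_media(example_capacity, example_rate):.0f} minutes on {example_capacity:.0f} TB")
```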

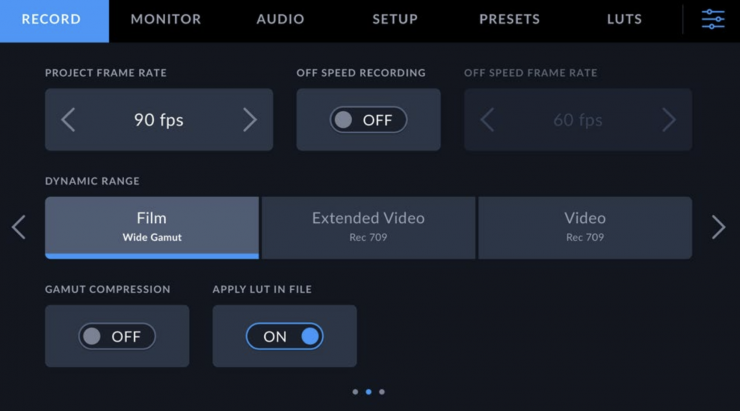

The camera’s project frame rate should be set to 90 frames per second to conform to Apple Vision Pro’s display frame rate.

If you are shooting for other systems, lower frame rates such as 60fps or 50fps may be sufficient. Traditional film-style frame rates such as 24fps or 25fps may be appropriate if you are shooting visual effects plates for 24p motion picture film content.

Immersive and traditional 2D content share a lot in common, but there are also distinct differences. For example, have you ever gotten motion sickness in a car because you were too busy scrolling on your phone? It is caused by a sensory mismatch: your brain receives conflicting signals.

Your inner ear is telling your body you are moving, but your eyes are telling it you are stationary. It’s easy to produce a similar experience with immersive. For example, if you pan the camera, your audience’s eyes will tell them they are moving, but their neck and inner ear will tell them they are very much stationary. As a result, it’s best to master stationary shots before learning how to make camera movement pleasant for your audience.

Most immersive shots are captured with a zero-degree, or near-zero-degree tilt. As the camera captures a 180+ degree field of view, both horizontally and vertically, the audience is still able to look up and down. But instead of the filmmaker forcing the audience to look in a particular direction, the audience is in control.

To encourage the audience to look at different parts of your image, you can use other techniques like lighting, aperture framing, and leading lines.

Apple Immersive Video has higher exposure requirements than traditional 24 fps capture and might require you to rethink your shooting and lighting approach. If you maintain a 180-degree shutter, 90 fps capture requires almost four times more light to achieve the same exposure.
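The “almost four times” figure follows directly from the exposure time per frame, which is (shutter angle / 360) / frame rate. Here is a minimal sketch that assumes a 180-degree shutter in both cases:

```python
import math


def exposure_time(fps: float, shutter_angle: float) -> float:
    """Per-frame exposure time in seconds: (angle / 360) / frame rate."""
    return (shutter_angle / 360) / fps


t_24 = exposure_time(24, 180)  # 1/48 s
t_90 = exposure_time(90, 180)  # 1/180 s

light_factor = t_24 / t_90             # how much more light 90 fps needs
extra_stops = math.log2(light_factor)  # the same difference expressed in stops

print(f"{light_factor:.2f}x more light, or {extra_stops:.2f} stops")
```

The ratio is 90 / 24 = 3.75, which is a little under two stops.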

In addition, the fixed iris of the lenses, which is around f4.5, is up to three stops darker than typical super speed lenses. This means you might need up to seven stops more light to achieve adequate exposure. However, if you are shooting at 90fps, using a wide shutter angle between 270º and 360º can help to compensate for high frame rate light loss while still achieving pleasing amounts of motion blur.
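To see how much a wider shutter claws back, the sketch below compares 90 fps at several shutter angles against a 24 fps, 180-degree reference. It uses the same exposure-time formula as above. It only accounts for shutter angle and frame rate; the difference in lens aperture comes on top of these numbers.

```python
import math


def exposure_time(fps: float, shutter_angle: float) -> float:
    """Per-frame exposure time in seconds: (angle / 360) / frame rate."""
    return (shutter_angle / 360) / fps


def stops_below(reference: float, candidate: float) -> float:
    """Stops less light `candidate` collects than `reference` (positive = darker)."""
    return math.log2(reference / candidate)


reference = exposure_time(24, 180)  # traditional 24 fps with a 180-degree shutter

shortfall = {}
for angle in (180, 270, 360):
    shortfall[angle] = stops_below(reference, exposure_time(90, angle))
    print(f"90 fps at {angle} degrees: {shortfall[angle]:.2f} stops below 24 fps / 180 degrees")
```

Opening up from 180 to 360 degrees recovers exactly one stop, which leaves roughly 0.9 stops of the frame-rate loss.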

Shutter speed has less of an impact on the appearance of high frame rate immersive content. While a 180-degree shutter is still favored by many for shooting at 24 or 30p, it’s more common to see the shutter angle used to aid exposure when shooting for immersive.

When shooting in low light, the shutter angle can be increased to 270 degrees or, in some exceptional circumstances, 360 degrees. When shooting in bright conditions, the shutter angle can be narrowed to as little as 11.2 degrees. However, in bright conditions it is more common to leave the shutter angle at your preferred setting for motion and use ND filters to control exposure.
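At 90 fps, each shutter angle corresponds to a specific exposure time. This sketch converts the angles mentioned above, using exact fractions so that values like 1/120 s come out cleanly. The list of angles is simply the set of values from this section.

```python
from fractions import Fraction

FPS = 90  # Apple Immersive Video frame rate


def exposure_seconds(fps: int, shutter_angle: str) -> Fraction:
    """Exact per-frame exposure time; the angle is a decimal string such as "11.2"."""
    return Fraction(shutter_angle) / 360 / fps


for angle in ("360", "270", "180", "11.2"):
    t = exposure_seconds(FPS, angle)
    print(f"{angle:>5} degrees at {FPS} fps = 1/{float(1 / t):.0f} s")
```

At 90 fps, 270 degrees works out to 1/120 s, and 11.2 degrees works out to roughly 1/2893 s.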

It’s worth noting that Apple Immersive Video’s high frame rate of 90 frames per second can cause issues when shooting with flickering lights. A 270-degree shutter is suggested when working in 60Hz environments, and a 324-degree shutter is recommended when working in 50Hz environments.
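Here is the reasoning behind those two numbers. Lights running on AC mains typically pulse at twice the mains frequency: 120 Hz in 60Hz regions and 100 Hz in 50Hz regions. Frame-to-frame flicker is avoided when every exposure spans a whole number of pulses. At 90 fps, 270 degrees gives 1/120 s and 324 degrees gives 1/100 s, which is exactly one pulse in each case. The check below is a sketch under that twice-mains assumption. Many LED fixtures flicker at other frequencies, so treat it as a rule of thumb rather than a guarantee.

```python
from fractions import Fraction


def flicker_cycles(fps: int, shutter_angle: int, mains_hz: int) -> Fraction:
    """Number of light pulses covered by one frame's exposure.

    Assumes the light pulses at twice the mains frequency, which is typical of
    lights run directly from AC mains but not of every LED fixture.
    """
    exposure = Fraction(shutter_angle, 360) / fps
    return exposure * (2 * mains_hz)


def is_flicker_safe(fps: int, shutter_angle: int, mains_hz: int) -> bool:
    """True when each exposure spans a whole, non-zero number of light pulses."""
    cycles = flicker_cycles(fps, shutter_angle, mains_hz)
    return cycles > 0 and cycles.denominator == 1


for mains in (60, 50):
    for angle in (180, 270, 324, 360):
        status = "safe" if is_flicker_safe(90, angle, mains) else "may flicker"
        print(f"{mains}Hz mains, 90 fps, {angle} degrees: {status}")
```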

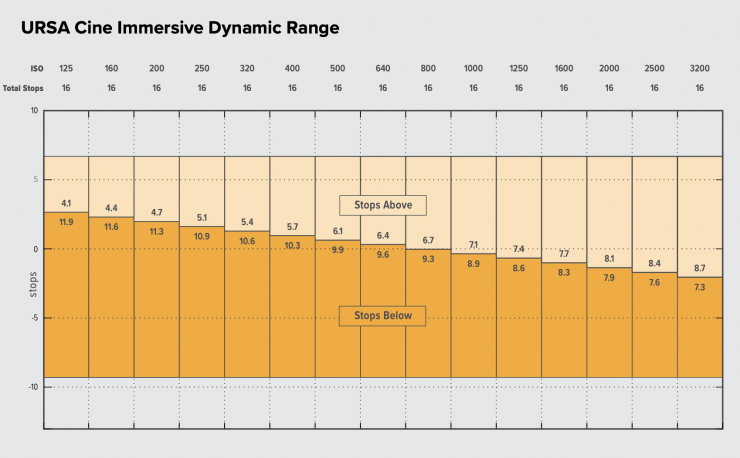

The ISO range for the URSA Cine Immersive is from ISO 200 to 3200. The optimum ISO is 800. Depending on your situation, you may choose a lower or higher ISO setting. For example, in low light conditions, ISO 1600 can be suitable but may introduce some visible noise. In bright conditions, ISO 200 can provide richer colors.
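Expressed in stops, the ISO range sits symmetrically around the optimum setting. ISO 200 is two stops below ISO 800, and ISO 3200 is two stops above it. A quick sketch of that conversion, where each doubling of ISO counts as one stop:

```python
import math

OPTIMUM_ISO = 800  # the optimum setting quoted for the camera


def stops_from_optimum(iso: int, optimum: int = OPTIMUM_ISO) -> float:
    """Stops above (+) or below (-) the optimum ISO; each doubling is one stop."""
    return math.log2(iso / optimum)


for iso in (200, 400, 800, 1600, 3200):
    print(f"ISO {iso}: {stops_from_optimum(iso):+.0f} stops relative to ISO {OPTIMUM_ISO}")
```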